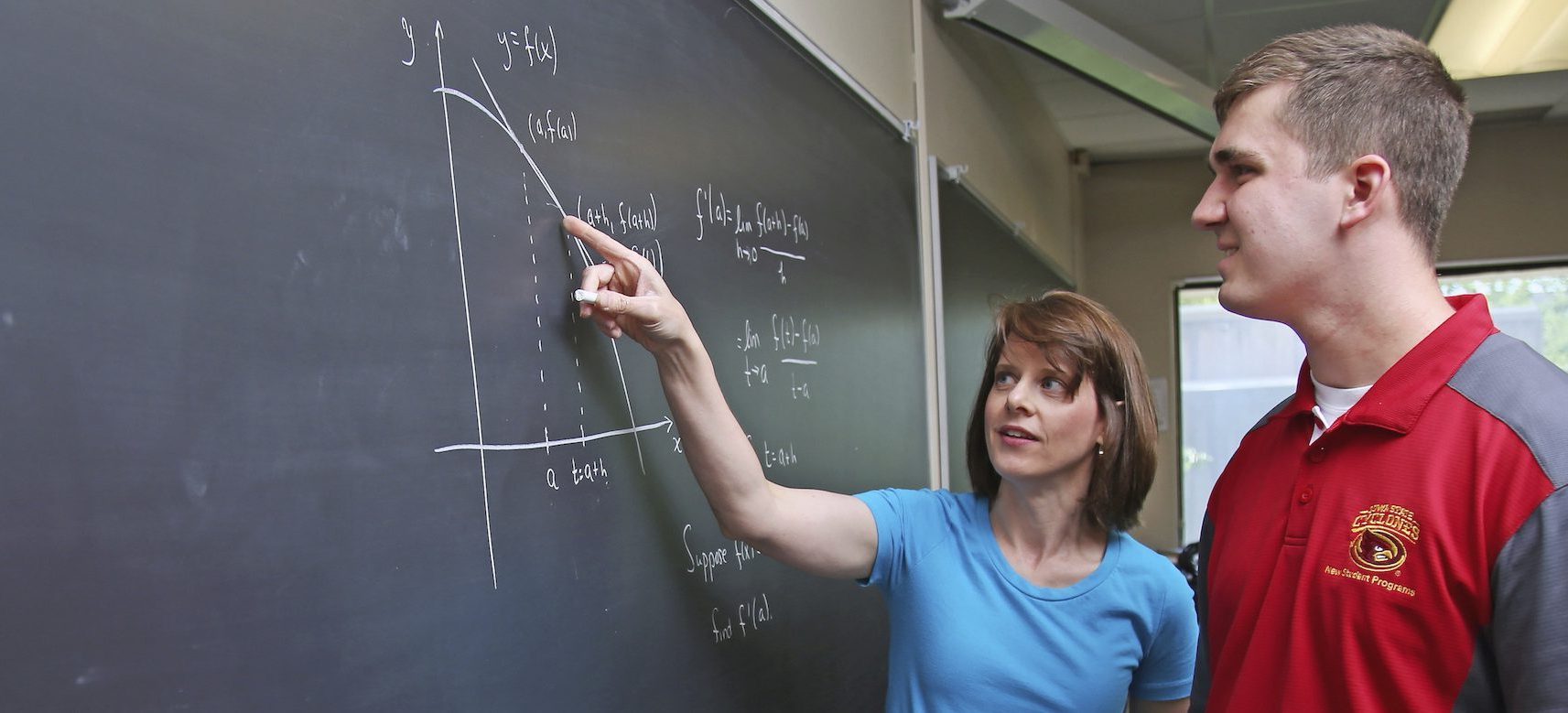

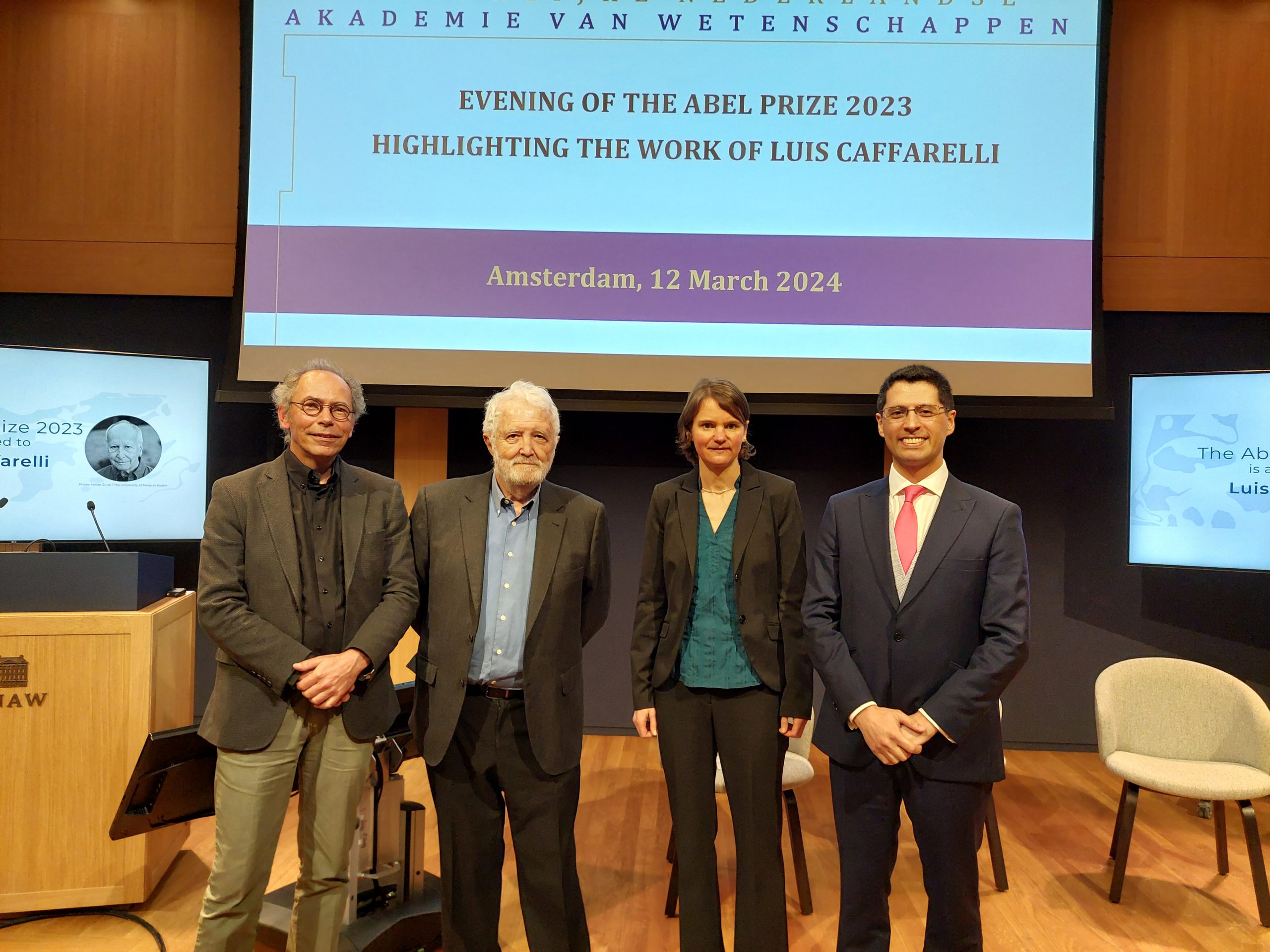

Research

Faculty members maintain well-funded research programs in several areas. Undergraduate and graduate students have opportunities to participate in research and explore mathematics with some of the most respected instructors in their fields.